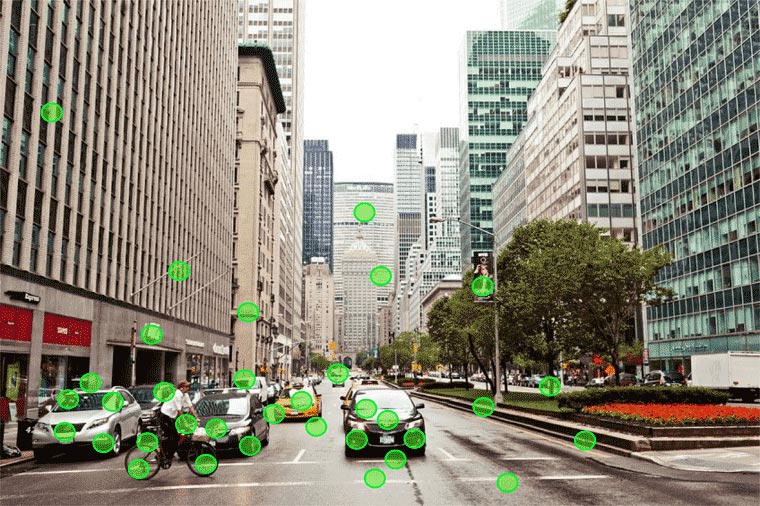

Object identification and tracking in a sequence of frames (video) consists of sampling of the scene, by e.g raster or uniform scatter, to extract features and compute their descriptors for target objects identification. This raster scanning procedure can by resource intensive, especially if every (or almost every) pixel in the image needs to be examined; hence this poses as a bottle neck for real time applications. Although in sparse image application only points of interest (such as edges) are to be examined, full image operations are still utilized (gradients or extracting corners), which requires information from all pixels. Heavily crowded scenes, such as urban landscape, containing pedestrians, cars and traffic signal, need to be identified and tracked by an automated driving assistance system (ADAS) and integrated, to be able to issue a timely response. However, for some applications, sampling space can be reduced if the temporal link between the expected successive locations of objects is retrieved.

.

.

The goal, therefore, is to dramatically reduce the image sample by predicting which pixels to sample on which we compute features and descriptors for further analysis, identification and tracking. The mathematical tool which deals with inference of the conditional (temporal) link between successive distributions of events (points related to target object at any time) is called temporal point processes. Temporal point processes are stochastic realizations of points scattered in space at time ti, having an intensity (loosely, the expected number of points per unit measure) conditioned on the intensity (or distribution) in the previous time step ti-1. The distribution of points is unknown a priori and needs to be estimated from the data.

.

.

Several types of temporal point processes are useful for object points (events) identification. For example, the self-excitatory process (Hawkes process, which is also used to model location of residual secondary earthquakes after a large shock) increases the sampling of points near a successful event (identification of object point) in subsequent time steps, thus providing more “relevant” samples in the vicinity of the object to be extracted. On the other hand, self-inhibitory processes diminish the number of points in the vicinity of “failed” or irrelevant events (non-object sample point), thus reducing the sample space where it is not needed.

.

We therefore arrive at the crucial point: how to retrieve such dynamic sampling based on temporal point processes. Such non trivial relationship must be learned and adapted dynamically from the data. We find the solution in Machine Learning methodologies, the goal of which is to continuously update the conditional intensity of the point process based on its history. The parameters of the conditional intensity of our point processes (or mixed inhibitory-excitatory process) can be obtained by minimizing log likelihood, thus obtaining the maximum likelihood estimators.

.

In the learning phase, we need to feed our network (e.g. recurrent neural network) with identified object points in each frame. Special attention must be given to applications for which the view point is static (e.g. security cameras) vs. dynamic (e.g. dash cameras in cars). These cases force the restriction that sampling process should converge to either the homogeneous Poisson point process (constant intensity) in the case of no temporal correlation, or to the excitatory-inhibitory mixed distribution (non-homogeneous), when object identification has some certainty. The use of cutting edge methodologies in computer vision and machine learning has been the everyday practice at RSIP Vision for years. Our engineers develop and implement state of the art methodologies to provide our clients with the most advanced and stable solution for their projects. To learn more about RSIP Vision’s activities in a wide range of industrial domains, please visit our project page.