Arie Rond and David Menashe

Artificial intelligence (AI) is already transforming healthcare, enabling capabilities that seemed unattainable a decade ago. Now, a new frontier, generative AI (GenAI), promises to bring revolutionary advancements to the ever-evolving field of medicine.

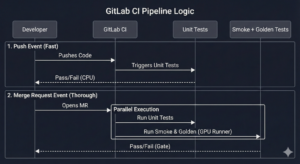

The emergence of GenAI mirrors the earlier adoption of deep learning in healthcare. When deep learning began moving from less regulated domains into medical applications, it unlocked high-impact use cases, especially in medical imaging. Medical device companies quickly recognized its potential and used the technology to enhance tasks such as classification, segmentation, tracking, and navigation. Although initial regulatory approvals were slow, they paved the way for broader acceptance. Today, numerous approved medical devices use AI to power a wide range of functions, and most companies in the sector are using, implementing, or considering AI solutions. The rapid growth in the number of FDA-approved, AI-enabled devices illustrates this trend, as shown in Fig. 1.

Figure 1: Number of FDA-approved AI/ML-enabled devices per year (source: FDA)

So, what does the future hold for GenAI in medical imaging? While it’s difficult to predict what the medical device landscape will look like, the remarkable capabilities of GenAI suggest it will play a significant role in improving patient outcomes and making healthcare more efficient and accessible. However, integrating GenAI into healthcare isn’t straightforward. Hallucinations, expensive hardware requirements, and a lack of large-scale testing are major hurdles. Developing GenAI imaging models also requires considerable technical expertise.

Not all GenAI applications will face the same challenges. Some tasks already addressed by existing AI solutions can be further improved with GenAI. For example, segmentation, which is now well accepted in clinical settings, can often be improved further by applying a GenAI model to the same data. An illustration can be found in the paper “MedSegDiff: Medical Image Segmentation with Diffusion Probabilistic Model”.

Another important area where GenAI can be applied relatively easily is generating synthetic data to train non-generative, legacy AI models. Since medical devices using these current-generation models (such as the U-Net segmentation model) have already been approved by the FDA, it should be relatively straightforward to improve those models by retraining them with synthetic data. This could prove crucial to solving the well-known problem of obtaining high-quality training data for medical AI models.

As a first step, GenAI can be used to generate large quantities of unlabeled synthetic data, as described in “Denoising diffusion probabilistic models for 3D medical image generation”, published in Scientific Reports (Nature Portfolio). The paper not only describes how to generate synthetic 3D CT and MRI data but also shows how that data can improve the results of a current-generation segmentation model.
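To make the idea more concrete, here is a minimal NumPy sketch of the generic denoising diffusion probabilistic model (DDPM) sampling loop (Ho et al., 2020) that this kind of work builds on. It is not the paper’s implementation. The linear noise schedule, T = 1000 steps, the toy 8x8x8 “volume”, and the `oracle_noise` function are all assumptions for illustration; `oracle_noise` stands in for the trained 3D denoising network and is exact only for a dataset containing a single example.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)   # assumed linear noise schedule
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)      # cumulative signal-retention factor per step

def q_sample(x0, t, rng):
    """Forward process: jump a clean volume x0 directly to noise level t."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps, eps

def p_sample_loop(predict_noise, shape, rng):
    """Reverse process: start from pure noise and denoise one step at a time."""
    x = rng.standard_normal(shape)
    for t in range(T - 1, -1, -1):
        eps_hat = predict_noise(x, t)  # in practice, a trained 3D network
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        # Add fresh noise on every step except the last (variance choice sigma_t^2 = beta_t)
        x = mean + np.sqrt(betas[t]) * rng.standard_normal(shape) if t > 0 else mean
    return x

# Toy usage: a "dataset" containing a single 8x8x8 volume
rng = np.random.default_rng(0)
target = rng.standard_normal((8, 8, 8))

def oracle_noise(x, t):
    # Hypothetical stand-in for the network: the exact noise for a one-example dataset
    return (x - np.sqrt(alpha_bars[t]) * target) / np.sqrt(1.0 - alpha_bars[t])

sample = p_sample_loop(oracle_noise, target.shape, rng)
# With this oracle, the sampler recovers the single training volume
print(np.abs(sample - target).max())
```

With a real trained network in place of the oracle, each call to `p_sample_loop` would yield a new synthetic volume drawn from the learned distribution rather than a copy of one training example.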

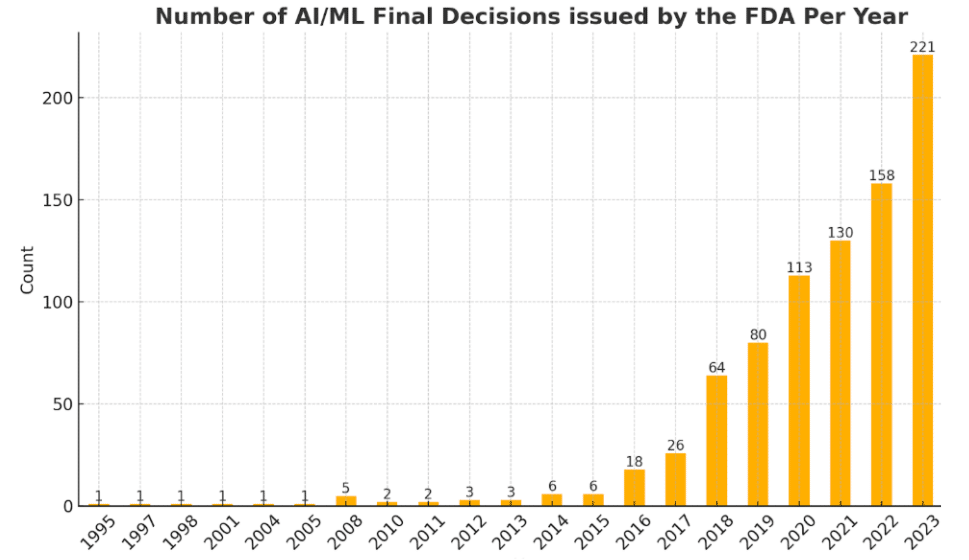

We can go one step further and use GenAI to generate labeled synthetic data. This not only overcomes the challenge of obtaining training data but also significantly reduces the cost and effort of labeling it. The process consists of three phases, as illustrated in Fig. 2. First, we use a small labeled seed dataset to train a GenAI model. Next, we use that model to generate a synthetic labeled dataset. Finally, we use the synthetic dataset together with the seed dataset to train the current-generation (non-generative) model. An important feature of this process is the ability to guide synthetic data generation with text prompts. For example, if the current-generation model performs worse on low-contrast images, we can prompt the GenAI model to generate more such images.

Figure 2: Generating synthetic labeled data for model training
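The three phases can be sketched as a toy pipeline. This is a minimal illustration under heavy assumptions, not a description of any published system: a Gaussian per (label, contrast) group stands in for the diffusion model, a dictionary of requested sample counts stands in for a text prompt, 2-D feature vectors stand in for images, and a nearest-centroid classifier stands in for the current-generation model. All function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_seed_dataset(n_per_group=10):
    """Hypothetical seed data: 2-D features with a class label (0/1) and a contrast tag."""
    X, y, cond = [], [], []
    for label in (0, 1):
        for contrast in ("high", "low"):
            center = np.array([label * 2.0, label * 2.0])
            spread = 0.5 if contrast == "high" else 1.0  # low contrast -> noisier features
            X.append(center + spread * rng.standard_normal((n_per_group, 2)))
            y += [label] * n_per_group
            cond += [contrast] * n_per_group
    return np.vstack(X), np.array(y), np.array(cond)

def fit_generator(X, y, cond):
    """Phase 1: fit a conditional generative model (stand-in: one Gaussian per group)."""
    model = {}
    for key in set(zip(y.tolist(), cond.tolist())):
        mask = (y == key[0]) & (cond == key[1])
        model[key] = (X[mask].mean(axis=0), X[mask].std(axis=0))
    return model

def generate(model, request):
    """Phase 2: sample labeled synthetic data; `request` plays the role of a prompt."""
    X, y = [], []
    for (label, contrast), n in request.items():
        mean, std = model[(label, contrast)]
        X.append(mean + std * rng.standard_normal((n, mean.size)))
        y += [label] * n
    return np.vstack(X), np.array(y)

def train_classifier(X, y):
    """Phase 3: train the downstream model (stand-in: nearest-centroid classifier)."""
    centroids = np.stack([X[y == c].mean(axis=0) for c in (0, 1)])
    return lambda Z: np.argmin(((Z[:, None, :] - centroids[None]) ** 2).sum(-1), axis=1)

X_seed, y_seed, c_seed = make_seed_dataset()
gen = fit_generator(X_seed, y_seed, c_seed)
# "Prompt" the generator to oversample low-contrast cases, assumed to be the weak spot
X_syn, y_syn = generate(gen, {(0, "low"): 200, (1, "low"): 200, (0, "high"): 50, (1, "high"): 50})
clf = train_classifier(np.vstack([X_seed, X_syn]), np.concatenate([y_seed, y_syn]))
print(clf(np.array([[0.0, 0.0], [2.0, 2.0]])))
```

The structure, rather than the stand-ins, is the point: the generator is fit only on the seed set, synthetic samples inherit their labels from the conditioning used to produce them, and the request can be skewed toward the conditions where the downstream model is weakest.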

This process is well illustrated in several recent publications, such as “DatasetDM: Synthesizing Data with Perception Annotations Using Diffusion Models” and “Synthetic vs. Classic Data Augmentation: Impacts on Breast Ultrasound Image Classification”. The latter shows how synthetic data improves the performance of breast ultrasound tumor classification, typically considered a challenging task for AI models.

The next wave of GenAI innovation will likely involve larger foundation models trained on vast datasets and capable of handling diverse tasks. For example, Segment Anything-style models can segment multiple anatomical structures without the need to develop a dedicated segmentation model for each one. A good example is the Med-SA model, which is based on Meta’s well-known Segment Anything Model (SAM) (see Fig. 3). While regulatory approval for such models is more complex than for the applications described above, their potential impact is enormous. As of this writing, only one such model has been approved by the FDA.

Figure 3: Example of a “Segment Anything” GenAI model applied to a CT scan

In conclusion, the current state of GenAI in healthcare resembles the early adoption stages of earlier AI technologies. Given its vast potential, GenAI is likely to make its mark on medical imaging soon, driving innovation and improving patient care.