Although robotic surgery arms have significantly increased surgeons’ freedom of operation, one important aspect of the operation is still not fully available to them: sensory feedback. Stereoscopic vision allows surgeons to estimate distances between instruments and tissue, and the slave robotic arms scale the surgeon’s coarse movements down to fine motions suited to the size of the tissue. Despite that, the sense of touch is completely missing from robotic surgery aids. To provide it, instruments need to be equipped with miniature touch sensors, which are currently expensive to integrate.

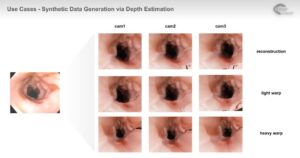

To obtain information about tissue deformation during surgery, both rigid instruments and soft tissues need to be tracked in real time. In tracking for robot-aided surgery, the characteristics of the targets are very diverse. Instruments have well-defined geometry and a predictable position relative to the camera. Tissue, on the other hand, can change its shape due to internal organ movement, and its appearance due to lighting conditions and camera visibility. Once the surgeon applies force to tissue with an instrument, the resulting tissue deformation needs to be estimated. This measure of deformation can be a valuable tool for estimating both organ properties and form, and an initial step toward visually based sensory feedback for robotic surgery equipment.
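One simple way to quantify deformation, once a mesh is available, is the relative length change of each mesh edge between a rest frame and the current frame. The sketch below is only an illustration under that assumption; the function name `edge_strains` and the toy triangle are hypothetical and do not describe any specific RSIP Vision algorithm.

```python
import math

def edge_strains(rest, deformed, edges):
    """Relative length change per mesh edge (0.0 means the edge is undeformed)."""
    strains = []
    for i, j in edges:
        rest_len = math.dist(rest[i], rest[j])
        new_len = math.dist(deformed[i], deformed[j])
        strains.append((new_len - rest_len) / rest_len)
    return strains

# Hypothetical 2D triangle: vertex 2 is pushed toward vertex 0 by an instrument.
rest = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
deformed = [(0.0, 0.0), (4.0, 0.0), (0.0, 1.5)]
edges = [(0, 1), (0, 2), (1, 2)]

strains = edge_strains(rest, deformed, edges)
```

Negative values indicate compression and positive values indicate stretching; edges far from the instrument stay close to zero.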

To track tissue, a mesh-like model needs to be fitted to the visual data. Salient features in the target tissue are first extracted, and the mesh model is then fitted to them. Energy minimization methods can be employed to keep the mesh from taking abnormal shapes, such as self-intersections and sharp deformations. Salient feature detection depends in part on illumination conditions: tissue with high reflectance tends to obscure salient features and degrades the quality of the mesh fit. Regulating the illumination source according to its proximity to the tissue will be dealt with in other articles. We therefore assume that illumination is regulated so that reflectance poses no serious obstacle, and that the energy functional defined for mesh fitting can overcome any remaining difficulties.
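As a rough illustration of energy-based mesh fitting, the sketch below minimizes a two-term energy by plain gradient descent: a data term that pulls vertices toward their matched salient features, and a smoothness term that penalizes changes in edge vectors relative to a reference mesh. The energy form, the function `fit_mesh`, and the parameters `lam`, `step`, and `iters` are assumptions chosen for this example; the article does not specify the actual functional.

```python
def fit_mesh(reference, features, edges, lam=1.0, step=0.1, iters=500):
    """Gradient descent on
       E = sum_i |v_i - f_i|^2 + lam * sum_(i,j) |(v_i - v_j) - (r_i - r_j)|^2
    where f_i exists only for vertices that have a matched salient feature."""
    verts = [list(p) for p in reference]
    for _ in range(iters):
        grad = [[0.0, 0.0] for _ in verts]
        # Data term: only vertices with a detected feature are pulled.
        for i, f in features.items():
            for k in range(2):
                grad[i][k] += 2.0 * (verts[i][k] - f[k])
        # Smoothness term: each edge vector should stay close to its reference.
        for i, j in edges:
            for k in range(2):
                d = (verts[i][k] - verts[j][k]) - (reference[i][k] - reference[j][k])
                grad[i][k] += 2.0 * lam * d
                grad[j][k] -= 2.0 * lam * d
        for i in range(len(verts)):
            for k in range(2):
                verts[i][k] -= step * grad[i][k]
    return verts

# Hypothetical chain of three vertices; the middle one has no visible feature
# (for example, it is hidden by a specular highlight).
reference = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
features = {0: (0.0, 1.0), 2: (2.0, 1.0)}
edges = [(0, 1), (1, 2)]

fitted = fit_mesh(reference, features, edges)
```

In this toy case the middle vertex has no data term of its own, yet the smoothness term carries it along with its neighbours. The step size must be small enough for gradient descent to remain stable; a real system would more likely use a dedicated solver.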

Since we are dealing with real-time tracking, temporal information needs to be taken into account in mesh fitting. A mesh (for example, a triangulated one) is composed of a set of points with positional relationships between them. This information can be used to regularize the mesh as it deforms with the tissue across frames. The mesh needs to disregard the region occupied by the tracked instrument, which should therefore be tracked accurately to prevent the mesh fitting from failing. The expected shape of instruments such as robotic tweezers can be modeled beforehand and fitted to the visual data at every frame. The interface between instrument and tissue can only be deduced indirectly, from the quantitative information given by the mesh deformation after pressure is applied to the tissue.
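One possible way to use temporal information while ignoring the instrument is sketched below: each vertex is predicted from the two previous frames with a constant-velocity model, and the prediction is blended with the current observation unless the vertex lies under the tracked instrument. The constant-velocity model, the function `update_mesh`, and the blending weight `alpha` are illustrative assumptions, not the method used in the article.

```python
def update_mesh(prev, prev_prev, observed, occluded, alpha=0.7):
    """Blend a constant-velocity prediction with the current observations.
    Vertices listed in `occluded` (covered by the instrument) use the
    prediction only, so instrument pixels never drive the tissue mesh."""
    updated = []
    for i, (p, pp) in enumerate(zip(prev, prev_prev)):
        # Constant-velocity prediction: p + (p - pp).
        pred = (2.0 * p[0] - pp[0], 2.0 * p[1] - pp[1])
        if i in occluded or observed[i] is None:
            updated.append(pred)
        else:
            o = observed[i]
            updated.append((alpha * o[0] + (1.0 - alpha) * pred[0],
                            alpha * o[1] + (1.0 - alpha) * pred[1]))
    return updated

# Hypothetical two-vertex example: vertex 1 is under the instrument, so its
# spurious observation (5.0, 5.0) is ignored.
prev_prev = [(0.0, 0.0), (1.0, 0.0)]
prev = [(0.0, 0.1), (1.0, 0.1)]
observed = [(0.0, 0.2), (5.0, 5.0)]
occluded = {1}

current = update_mesh(prev, prev_prev, observed, occluded)
```

The set of occluded vertices would come from the instrument tracker, which is why inaccurate instrument tracking can corrupt the tissue mesh.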

Real-time tracking is one of the most common tasks in computer vision. In controlled environments, such tasks are performed with high accuracy. However, real-life data, especially data obtained from noisy or insufficiently lit endoscopic cameras, pose serious challenges. The experienced team of engineers at RSIP Vision has been addressing such challenges successfully in many medical applications. RSIP Vision has been operating in the field of computer vision for over 25 years. To learn more about how RSIP Vision’s algorithms can be used in robotic surgery and other medical applications, you can visit our projects page and read more articles in Computer Vision News.
