Tuesday

Tuesday

Phillip Isola

11

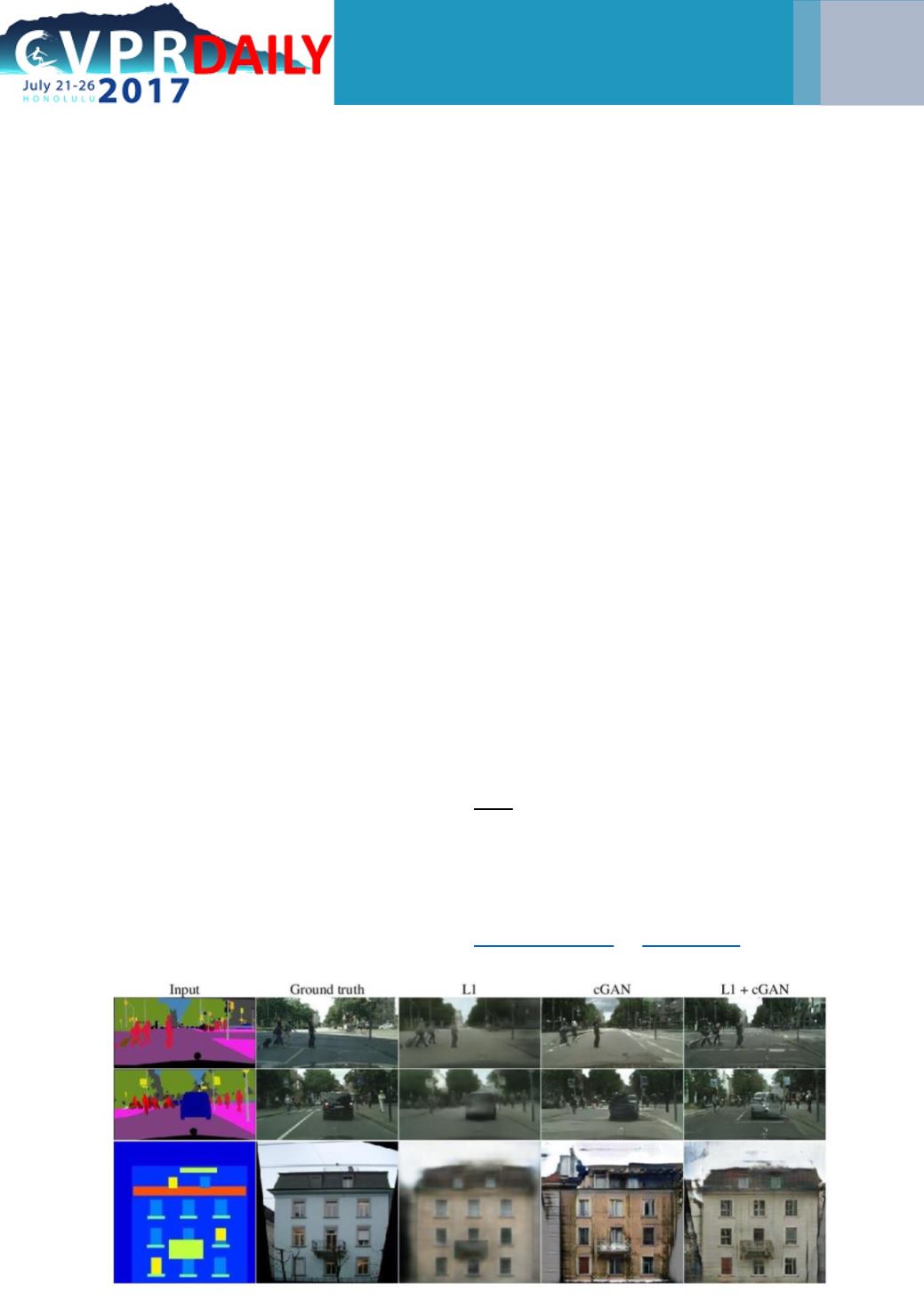

The final model therefore only has a

couple of tricks which turned out to be

necessary. One thing they found is that

while GANs are hard to optimise and

can be unstable in the unconditional

case, the conditional case is a lot more

constrained: the conditional color

distribution given a black-and-white

image has much lower entropy than

just the distribution over all possible

random images. Because you have

paired inputs-outputs, you are now

also in a supervised learning setting:

you can mix your GAN objective with

the more traditional

supervised

objective. That’s what they did in this

work - they added L1 regression as an

extra term in the objective to stabilise

things. This leads to faster convergence

and learning is more stable. “The nice

thing is that if you average in this L1

regression with a small weight, then it

doesn’t really change the final results”,

Phillip told us, “and you still get nice

clean GAN-quality results”.

For future work, Phillip thinks there is

still a lot of exciting things to do in the

conditional GAN setting for image-to-

image problems, and they already have

a follow-up paper, called CycleGAN.

Here they start with the observation

that in the conditional GAN setting

they needed paired supervised data.

Given coloured images for example,

they can train a mapping from black-

and-white to coloured images in a

supervised fashion, because there a

million images to use for this. But if

you want to learn a mapping between

two domains, like paintings and

photos, then you don’t know the

pairing. You might for example want to

learn the mapping from a photo to a

Monet-style image - but since these

don’t exist, this can’t be trained in a

supervised fashion. So without the

paired data, you can’t apply things

quite the same way. “But it turns out

that some small changes allow you to

also learn the mapping in the case

where you don’t have paired data, but

you just have two stylistically different

domains”, Phillip told us.

If you want to learn more about

Phillip’s work, make sure to visit his

poster (number 65) “Image-To-Image

Translation With Conditional

Adversarial Networks” today at 10:00.

TIP: ask him also about a fun tool

made by Christopher Hesse with their

code, for translating sketches of cats

into photos of cats.

Current paper CycleGAN