Vamsi told us that the motivation and

the context of his current work started

with the success of deep learning. He

describes deep learning as “a beast

which needs to be tamed”. That is, we

need machine learning and computer

vision tools which can decode the

learned deep neural networks. “There

is a lot of software which you can use

to train a good networks, but we also

need to understand what the deep

representations really mean, and their

relationship to human semantics”,

Vamsi said. His work contributes to

taking first steps towards that goal. It is

asking: given a trained network, what

interesting deep representations did

this network learn? Do the deep

networks see what humans see with

respect to different categories? Vamsi

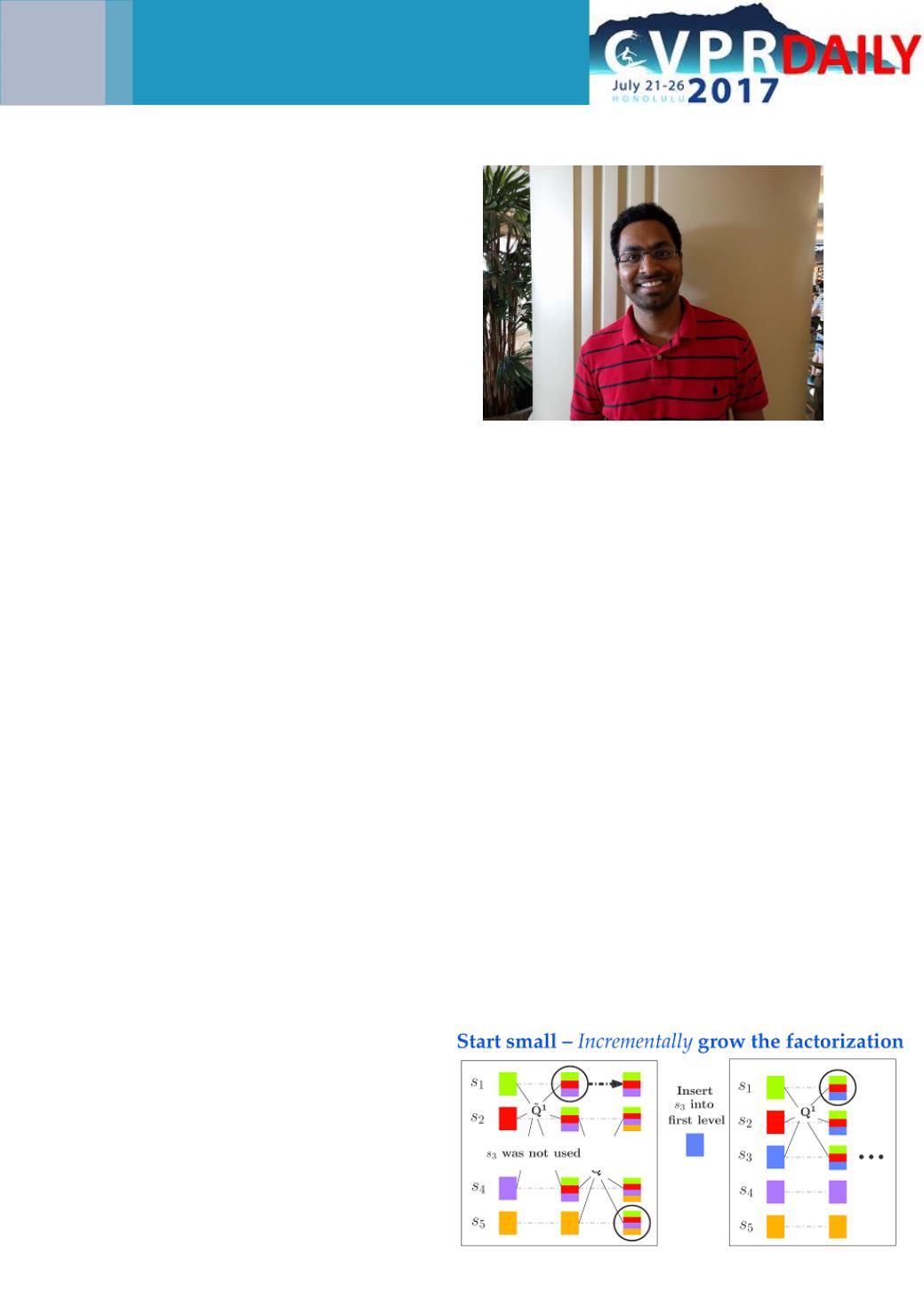

and his co-author’s approach to

answering these questions is to take

symmetric matrices from deep

network representations,

and

factorising the complex hierarchical

block structure in this data. The

existing tools for this, like PCA, use

low-rank and global methods and are

not adequate, Vamsi told us. Therefore

he proposes a novel factorisation,

which he calls

incremental

multiresolution matrix factorisation.

This is the first Mallat-style wavelet on

symmetric matrices.

Constructing wavelets on matrices themselves, instead of on non-Euclidean data such as graphs and trees, is a relatively recent development, Vamsi told us. The authors visualise the factorisation that this method produces as what they call an MMF graph.
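To make the idea of a multiresolution factorisation more concrete, here is a minimal Python/NumPy sketch of a simplified greedy variant. It is not the incremental algorithm from the paper. It assumes the factorised form A ≈ U H Uᵀ, where U is a product of sparse 2-point (Givens) rotations and H is core-diagonal: at each level one rotated coordinate is retired as a “wavelet” and keeps only its diagonal entry, while the still-active coordinates keep a dense block. The function names `givens` and `greedy_mmf`, the pair-selection rule (largest off-diagonal entry) and the retirement rule (least coupled index) are hypothetical choices made only for illustration.

```python
import numpy as np


def givens(n, i, j, c, s):
    """n x n rotation that acts only on coordinates i and j."""
    G = np.eye(n)
    G[i, i] = c
    G[j, j] = c
    G[i, j] = s
    G[j, i] = -s
    return G


def greedy_mmf(A, n_wavelets):
    """Simplified greedy 2-point MMF sketch: A ~= U @ H @ U.T, H core-diagonal.

    Illustrative only (hypothetical heuristics); NOT the incremental MMF
    algorithm of Ithapu et al.
    """
    A = np.array(A, dtype=float)
    n = A.shape[0]
    U = np.eye(n)              # accumulated rotation, so that A_rot = U.T @ A_orig @ U
    active = list(range(n))    # coordinates not yet retired as wavelets
    wavelets = []
    for _ in range(n_wavelets):
        if len(active) < 2:
            break
        # Hypothetical pair choice: strongest off-diagonal coupling among active indices.
        pairs = [(i, j) for k, i in enumerate(active) for j in active[k + 1:]]
        i, j = max(pairs, key=lambda p: abs(A[p[0], p[1]]))
        # Jacobi angle that zeroes A[i, j] in G.T @ A @ G.
        theta = 0.5 * np.arctan2(2.0 * A[i, j], A[j, j] - A[i, i])
        G = givens(n, i, j, np.cos(theta), np.sin(theta))
        A = G.T @ A @ G
        U = U @ G
        # Hypothetical retirement rule: retire the index least coupled to the active set.
        w = min((i, j), key=lambda k: sum(A[k, m] ** 2 for m in active if m != k))
        active.remove(w)
        wavelets.append(w)
    # Core-diagonal H: keep the active block, keep only the diagonal of wavelet rows/cols.
    H = A.copy()
    for w in wavelets:
        d = H[w, w]
        H[w, :] = 0.0
        H[:, w] = 0.0
        H[w, w] = d
    return U, H, wavelets


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.standard_normal((6, 3))   # stand-in for a matrix of representations
    A = X @ X.T                       # symmetric input matrix
    U, H, wavelets = greedy_mmf(A, n_wavelets=3)
    print("retired wavelets:", wavelets)
    print("reconstruction error:", np.linalg.norm(A - U @ H @ U.T))
```

A Gram matrix of feature vectors, such as `X @ X.T` for a hypothetical activation matrix `X` with one row per category, is one example of a symmetric input. Because U is orthogonal, the reconstruction error equals the norm of the off-diagonal entries discarded from the retired wavelet rows, so a matrix with clean block structure (for example, block-diagonal) can be reconstructed almost exactly.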

If you want to learn more about Vamsi’s work and see examples of these visualisations, attend his talk “Decoding Deep Networks” at the Explainable Computer Vision workshop on Wednesday, July 26. He will talk about how to use the factorisations proposed in their paper to study deep neural networks and the evolution of semantics within a neural network, and how these compare to human semantics.

Vamsi Ithapu


Tuesday

The Incremental Multiresolution Matrix Factorization Algorithm

Vamsi Ithapu is a PhD student at the University of Wisconsin-Madison, where he works on the explainability of deep neural networks. His current work is joint work with Risi Kondor, Sterling C. Johnson and Vikas Singh.